The Green Sheet Online Edition

April 4, 2025 • 25:04:02

Model governance: The AI risk financials cannot overlook

Large language models (LLMs) are transforming financial services, bringing efficiency and innovation at an unprecedented scale. For example, JPMorgan Chase's LLM Suite is used by over 200,000 employees globally to enhance productivity and improve customer services.

BloombergGPT is a 50 billion parameter model that's widely effective in sentiment analysis, among other sophisticated tasks. The financial services industry is diving head first into the latest in LLM technologies. But with great power comes great risk. The challenge isn't just using LLMs—it's governing them effectively.

Regulators demand clear, demonstrable proof of a model's integrity. They ask:

- Is the model open source or proprietary? Regulators will require documentation that traces the model's origin, including licensing details, modification history and security assessments of its dependencies.

- Was it trained on biased or unverified data? Institutions must provide records of data provenance, bias audits and testing methodologies to prove the model does not introduce discriminatory outcomes.

- Can its outputs be explained and controlled? Financial institutions will need to implement model explainability techniques, such as LIME or SHAP, and demonstrate governance mechanisms that prevent unintended behaviors or hallucinations.

For decades, financial institutions have used models to set policies, such as predicting loan default rates or setting credit card interest rates. These models undergo rigorous validation to ensure they don't introduce bias or hidden risks. LLMs must now undergo the same scrutiny.

Just as banks stress-test risk models, LLMs require adversarial testing to ensure fairness, reliability and compliance.

Why LLM governance feels familiar

Despite the unique challenges LLMs introduce, their governance shares core principles with traditional model oversight. Banks have long been required to validate and document the models used in lending, risk management and fraud detection. These models must be explainable, auditable and free from discrimination.

Similarly, LLMs demand:

- Transparency: Just as banks document how risk models generate credit scores, institutions must track how LLMs make decisions.

- Bias mitigation: Financial models are tested for fairness to prevent discrimination in lending. LLMs must be evaluated for biases that could lead to regulatory or ethical concerns.

- Change control:Traditional financial models require versioning and strict change management. The same discipline must apply to LLMs to ensure updates don't introduce new risks.

- The key takeaway: While LLMs operate differently, the governance mindset remains the same—rigorous validation, continuous oversight and accountability are non-negotiable.

Why LLM governance is different

Traditional financial models work with structured data and predefined rules. LLMs, on the other hand, are probabilistic and non-deterministic. The same input can yield different outputs, making verification more complex.

Additionally, LLMs introduce unique security risks. While financial risk models operate in controlled environments, LLMs can be manipulated through adversarial attacks, prompt injections or unintentional data leaks. Their massive scale and opaque decision-making processes make explainability and control far more challenging than before.

The growing governance gap

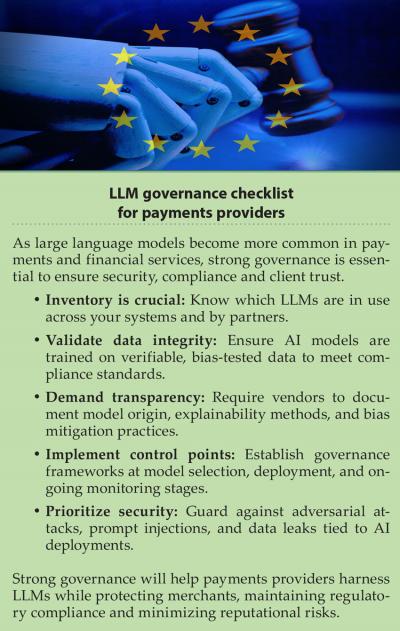

Many organizations don't know which LLMs are being used, where they're deployed or what risks they pose. Without strong governance, institutions risk compliance failures, reputational damage and security breaches.

Key governance challenges include:

- Model discovery: Identifying all LLMs in use across the organization.

- Risk evaluation: Assessing biases, vulnerabilities and data integrity.

- Policy enforcement: Defining clear adoption and usage standards.

- Security controls: Blocking high-risk models and mitigating threats.

Moving forward with stronger governance

Start by building an inventory through tools that allow you to discover the pre-trained AI models already used within systems. Next, in collaboration with stakeholders, decide on the organizations' risk tolerance and create policies to enforce it, outlining the specific criteria all AI models must meet before they can be used within applications.

Next, implement governance controls at the point of model selection. Using tools to evaluate open-source models based on security, quality and compliance can mitigate risk before deployment. For existing models, financial institutions must build an inventory, establish strict policies and implement automated enforcement mechanisms.

And finally, implement technologies that warn when policies are violated, and allow you to block high-risk models from being introduced into your environment. Ultimately, robust LLM governance isn't just a regulatory necessity—it's essential to ensuring AI remains an asset, not a liability.

By applying the same discipline used in traditional model governance, financial institutions can harness LLMs safely and effectively.

Karl Mattson is known globally as a cybersecurity innovator with over 25 years of diverse experience as an enterprise CISO, technology strategist and startup advisor across technology, retail and financial industry verticals. He serves today as the CISO for Endor Labs, a startup focused on open source software and software supply chain security. Contact him via LinkedIn at linkedin.com/in/karlmattson1.

Notice to readers: These are archived articles. Contact information, links and other details may be out of date. We regret any inconvenience.